Abstract

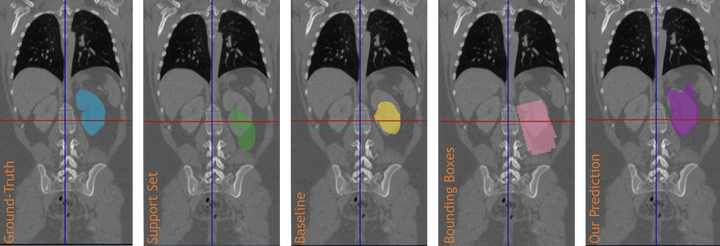

Semantic segmentation is an important task in the medical field, used to identify the exact extent and orientation of significant structures such as organs and pathologies. Deep neural networks can perform this task well by leveraging the information in a large, well-labeled dataset. This paper presents a method that reduces the need for such an extensive well-labeled dataset. The method also addresses semi-supervision by enabling segmentation from bounding box annotations, avoiding the need for full pixel-level annotations. The network consists of a single unbranched U-Net-based architecture that generates a few-shot segmentation of an unseen human organ using just 4 example annotations of that organ. The network is trained by alternately minimizing a nearest neighbor loss for prototype learning and a weighted cross-entropy loss for segmentation learning, and performs fast 3D segmentation with a median score of 54.64%.
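
As a rough illustration of the segmentation term mentioned above, the following minimal sketch computes a weighted cross-entropy over voxels with NumPy. The abstract does not say how the class weights are chosen, so the inverse-frequency weighting here, along with the function names `inverse_frequency_weights` and `weighted_cross_entropy`, is an assumption made purely for illustration and not necessarily the scheme used in the paper.

```python
import numpy as np


def inverse_frequency_weights(labels, num_classes):
    """Hypothetical weighting: rarer classes get larger weights."""
    labels = np.asarray(labels, dtype=int)
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    total = counts.sum()
    counts[counts == 0] = 1.0  # avoid division by zero for absent classes
    return total / (num_classes * counts)


def weighted_cross_entropy(probs, labels, class_weights, eps=1e-12):
    """Weighted cross-entropy, normalized by the sum of applied weights.

    probs:         (N, C) predicted class probabilities per voxel
    labels:        (N,)   integer ground-truth class per voxel
    class_weights: (C,)   weight for each class
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)
    class_weights = np.asarray(class_weights, dtype=float)
    # Probability the model assigned to the true class of each voxel.
    picked = probs[np.arange(labels.size), labels]
    voxel_weights = class_weights[labels]
    per_voxel = -voxel_weights * np.log(picked + eps)
    return per_voxel.sum() / voxel_weights.sum()


if __name__ == "__main__":
    # Toy example: 3 background voxels, 1 organ voxel.
    labels = np.array([0, 0, 0, 1])
    probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]])
    weights = inverse_frequency_weights(labels, num_classes=2)
    print(weighted_cross_entropy(probs, labels, weights))
```

Weighting matters in organ segmentation because foreground voxels are typically far fewer than background voxels; without it, a network can reach a low loss by predicting background everywhere.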